Data Profiling

Run on-demand statistical analysis of your source fields to understand data patterns and quality.

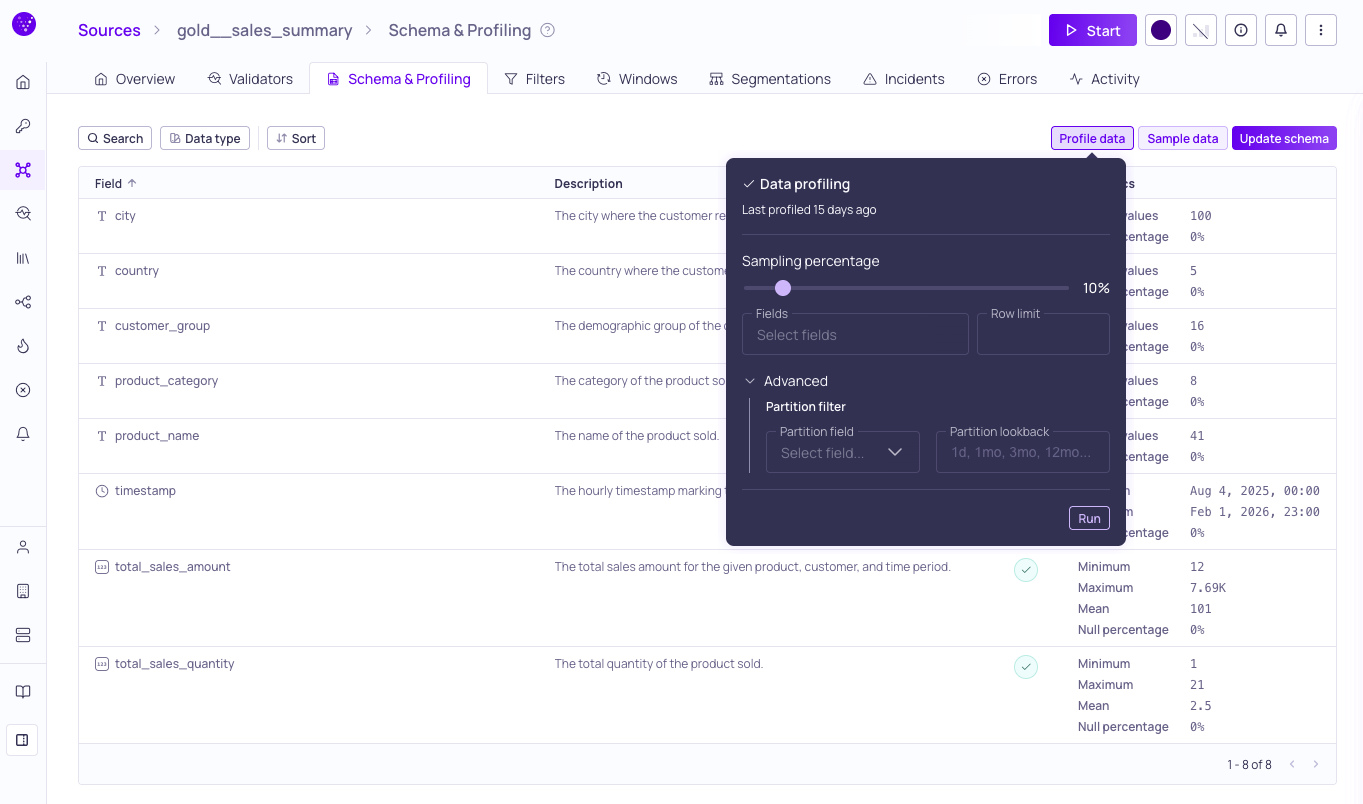

Data profiling configuration options, with results shown in the table

Data Profiling provides statistical analysis of the data in your source or catalog asset at the table, field, and segment level. Use it to understand the distribution of values in your data, identify quality issues, and inform your validator setup. Validio supports both on-demand profiling from the UI and continuous profiling through validators.

How Profiling Works

Profiling operates at different granularities and across both data values and metadata.

Profiling modes

Validio offers two ways to profile your data:

- On-demand profiling — Trigger a one-time profiling run from the Schema & Profiling tab. This is useful for initial exploration of a new source or for spot-checking data before configuring validators. See Running a Profile below.

- Continuous profiling — Run profiling automatically on a configurable schedule (hourly, daily, weekly, monthly, or other cadences) or trigger it as part of a data pipeline. Continuous profiling is configured through validators, which monitor your data on an ongoing basis and alert you when metrics deviate from expected patterns.

Statistical profiling

Statistical profiling analyzes the actual values in your data at three levels:

- Table level — Aggregate statistics across the entire table, such as row count and overall null rates.

- Field level — Per-column statistics, as shown in the Profile Metrics section. This is the default view in the Schema & Profiling tab.

- Segment level — Statistics for specific data groups. Use segmentation to profile groups independently, revealing quality issues hidden in aggregate views.
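To illustrate why segment-level statistics can reveal issues that aggregate views hide, here is a minimal Python sketch. It is not Validio's implementation. The function names, the `country` segment field, and the sample rows are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def null_percentage(rows, field):
    """Percentage of rows whose value for `field` is None (0.0 if there are no rows)."""
    if not rows:
        return 0.0
    nulls = sum(1 for row in rows if row.get(field) is None)
    return 100.0 * nulls / len(rows)


def null_percentage_by_segment(rows, field, segment_field):
    """Null percentage of `field`, computed separately for each segment value."""
    groups = defaultdict(list)
    for row in rows:
        groups[row.get(segment_field)].append(row)
    return {segment: null_percentage(group, field) for segment, group in groups.items()}


# Hypothetical sample data: 'country' plays the role of the segment field.
rows = [
    {"country": "SE", "email": "a@example.com"},
    {"country": "SE", "email": "b@example.com"},
    {"country": "SE", "email": "c@example.com"},
    {"country": "SE", "email": "d@example.com"},
    {"country": "SE", "email": "e@example.com"},
    {"country": "SE", "email": "f@example.com"},
    {"country": "NO", "email": None},
    {"country": "NO", "email": "g@example.com"},
]

overall = null_percentage(rows, "email")                          # 12.5
per_segment = null_percentage_by_segment(rows, "email", "country")  # {"SE": 0.0, "NO": 50.0}
```

In this example the aggregate null rate looks modest, while one segment is missing half of its values.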

Metadata profiling

Validio also automatically profiles metadata at the dataset and table level, including:

- Read and write activity on the table.

- Tags from the source system or third-party tools such as dbt.

- Descriptions and ownership information imported from catalog tools such as Atlan.

Metadata profiling runs automatically and requires no additional configuration.

Running a Profile

To profile your source or catalog asset:

- Navigate to the Schema & Profiling tab.

- Click Profile data.

- Configure the profile settings in the dialog.

| Setting | Description |
|---|---|
| Sampling Percentage | (Only available for tables and materialized views.) Adjust the percentage of data to include in the profiling run. Lower values speed up profiling on large tables while still providing representative statistics. |
| Fields | Select specific fields to analyze. By default, all fields are profiled. |
| Row limit | Set the maximum number of rows to include in the profiling run. Use this to cap execution time on large datasets. |
| Partition field | (Advanced) Select a partition field to filter the data before profiling. This limits the profiling run to specific partitions rather than scanning the full dataset. |
| Partition lookback | (Advanced) Set the number of partitions to look back from the most recent partition. Use this with Partition field to profile only recent data. |

- Click Run to start the profiling run.

Results are displayed inline for each field once the run completes.
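As a rough mental model of how the settings above narrow the data that a run scans, the following Python sketch applies a partition lookback, a sampling percentage, and a row limit to an in-memory list of rows. This is an assumption-laden illustration, not how Validio executes a profile: the function `select_rows`, its parameters, the order in which the settings are applied, and the row-by-row random sampling are all hypothetical.

```python
import random


def select_rows(rows, partition_field=None, partition_lookback=None,
                row_limit=None, sampling_percentage=100.0, seed=0):
    """Return the subset of rows a profiling run would look at (illustrative only)."""
    selected = list(rows)

    # Partition lookback: keep only the N most recent partition values.
    if partition_field is not None and partition_lookback is not None:
        partitions = sorted({row[partition_field] for row in selected}, reverse=True)
        recent = set(partitions[:partition_lookback])
        selected = [row for row in selected if row[partition_field] in recent]

    # Sampling percentage: keep each row with the given probability (assumed scheme).
    if sampling_percentage < 100.0:
        rng = random.Random(seed)
        selected = [row for row in selected if rng.random() * 100.0 < sampling_percentage]

    # Row limit: cap the number of rows included in the run.
    if row_limit is not None:
        selected = selected[:row_limit]

    return selected


# Hypothetical data: five daily partitions with two rows each.
rows = [{"day": f"2024-01-0{d}", "value": d * 10 + i} for d in range(1, 6) for i in range(2)]

recent = select_rows(rows, partition_field="day", partition_lookback=2)  # rows from the last 2 days
capped = select_rows(rows, row_limit=3)                                  # at most 3 rows
```

Combining a partition lookback with a row limit keeps the run focused on recent data while bounding its cost.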

Profile Metrics

Profiling results provide metrics specific to the data type of each field:

| Metric | Description |
|---|---|
| Max | The maximum value (numeric fields) or maximum length (strings). |
| Mean | The average value (numeric fields). |
| Min | The minimum value (numeric fields) or minimum length (strings). |
| Null percentage | The percentage of rows with a NULL value for this field (all data types). |
| Unique Values | The number of unique values for this field (all data types). |

Additional profiling dimensions such as freshness, completeness, accuracy, and consistency are available through validators.
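The field-level metrics in the table above can be sketched in a few lines of Python. This is a simplified illustration rather than Validio's implementation. The function `profile_field` is hypothetical, and it assumes that NULL values are excluded when counting unique values, which the documentation does not specify.

```python
def profile_field(values):
    """Compute Min, Max, Mean, Null percentage, and Unique Values for one field."""
    total = len(values)
    non_null = [v for v in values if v is not None]
    metrics = {
        "null_percentage": 100.0 * (total - len(non_null)) / total if total else 0.0,
        # Assumption: NULLs are not counted as a unique value.
        "unique_values": len(set(non_null)),
        "min": None,
        "max": None,
        "mean": None,
    }
    if not non_null:
        return metrics

    if all(isinstance(v, str) for v in non_null):
        # String fields: Min and Max describe string length; Mean does not apply.
        lengths = [len(v) for v in non_null]
        metrics["min"], metrics["max"] = min(lengths), max(lengths)
    elif all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in non_null):
        # Numeric fields: Min, Max, and Mean describe the values themselves.
        metrics["min"], metrics["max"] = min(non_null), max(non_null)
        metrics["mean"] = sum(non_null) / len(non_null)
    return metrics


# Hypothetical field values.
amount_profile = profile_field([10, 20, None, 30])
name_profile = profile_field(["ab", "abcd", None, None])
```

Here `amount_profile` reports a minimum of 10, a maximum of 30, a mean of 20.0, and 25% NULLs, while `name_profile` reports lengths 2 and 4 as Min and Max and leaves Mean empty.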

Interpreting Results

Profile results are shown alongside each field in the schema list. Use the results to:

- Identify fields with high null rates that may need attention.

- Spot unexpected value ranges or distributions.

- Verify that data types and field contents match your expectations before setting up validators.

- Inform which validators to configure based on observed data patterns. See Validator Recommendations for AI-assisted suggestions.

- Compare profiling results across subsets to detect inconsistencies in specific data segments.
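If you export or transcribe profile results, a small script can help triage them along the lines listed above. The sketch below is hypothetical throughout: the `profile` dictionary layout, the `review_profile` function, the 20% null threshold, and the expected ranges are illustrative assumptions, not part of Validio's product or API.

```python
def review_profile(profile, max_null_percentage=20.0, expected_ranges=None):
    """Return (field, finding) pairs for fields that may need attention."""
    expected_ranges = expected_ranges or {}
    findings = []
    for field, metrics in sorted(profile.items()):
        # High null rates that may need attention.
        if metrics["null_percentage"] > max_null_percentage:
            findings.append((field, "high null percentage"))
        # Unexpected value ranges, checked only where an expectation is given.
        low, high = expected_ranges.get(field, (None, None))
        if low is not None and metrics.get("min") is not None and metrics["min"] < low:
            findings.append((field, "min below expected range"))
        if high is not None and metrics.get("max") is not None and metrics["max"] > high:
            findings.append((field, "max above expected range"))
    return findings


# Hypothetical profile results keyed by field name.
profile = {
    "age": {"null_percentage": 2.0, "min": -1, "max": 130},
    "email": {"null_percentage": 35.0, "min": 5, "max": 40},
}

findings = review_profile(profile, expected_ranges={"age": (0, 120)})
```

Findings like these are candidates for validators, for example a null-rate check on `email` or a range check on `age`.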
